Look @ Me

Sponsored Program with Meta

2022, Personal Project

Tools: Unity, Oculus Quest 2

What might be the new social contract of taking photos in Mixed Reality?

In this speculative mixed reality environment, a self-trained camera captures precious moments beyond our attention. These moments may be user-initiated or system-initiated. In this project, I explored the key moments that might capture the attention of computational eyes, going beyond human thinking processes.

Prototype

Prototyping in Unity: cameras target the user based on different inputs

Brief

Look @ Me is a project that explores how we are perceived, captured, and documented by other users and computational devices. It is a project about cameras and a Look At Dataset in Mixed Reality. Built in Unity, the project uses a Virtual Reality environment to simulate situations that might occur in MR. I explored and prototyped different MR input methods in which the shutter could be triggered by striking colors, glowing skin, or sounds, whether loud or trivial.

Design Questions

What is the social contract of taking photos in MR?

When should a vision model look and not look?

How to grab attention in MR?

What could be designed as a safeguard to opt out?

Concept

“This is the moment I want to stand out and grab attention.”

A new social contract where I give my consent to be archived.

The vision model is embedded in the camera in MR. With a self-trained AI model, users won't miss the chance to capture those meaningful moments that would otherwise be lost.

Semiotic Library, Look At Dataset

I explored various types of input as camera triggers in Unity.

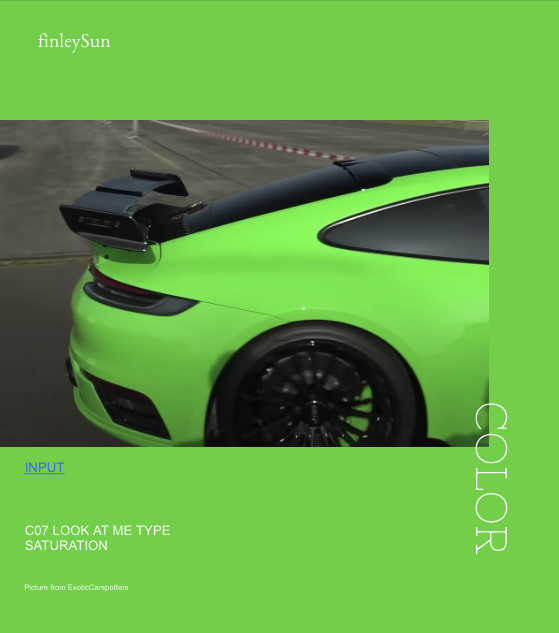

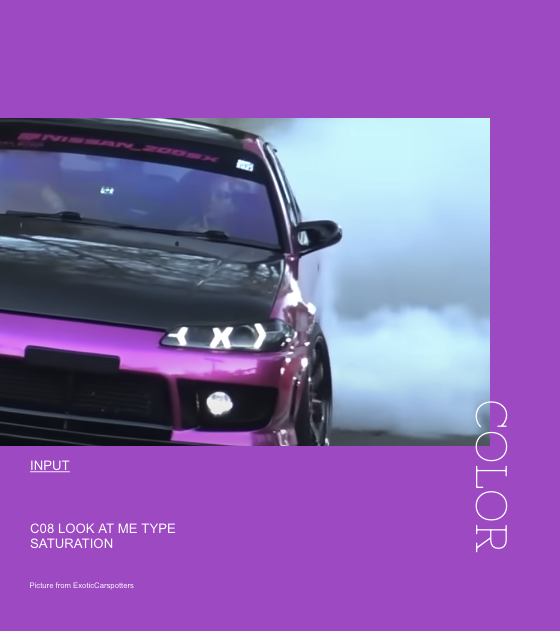

What if hues could trigger the camera?

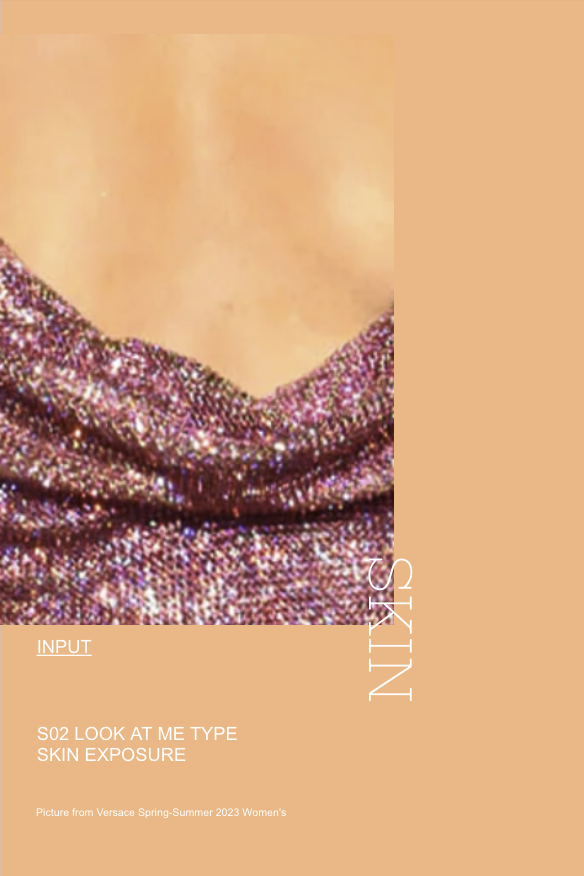

What if glowing skin could trigger the camera?

What if volume could trigger the camera?

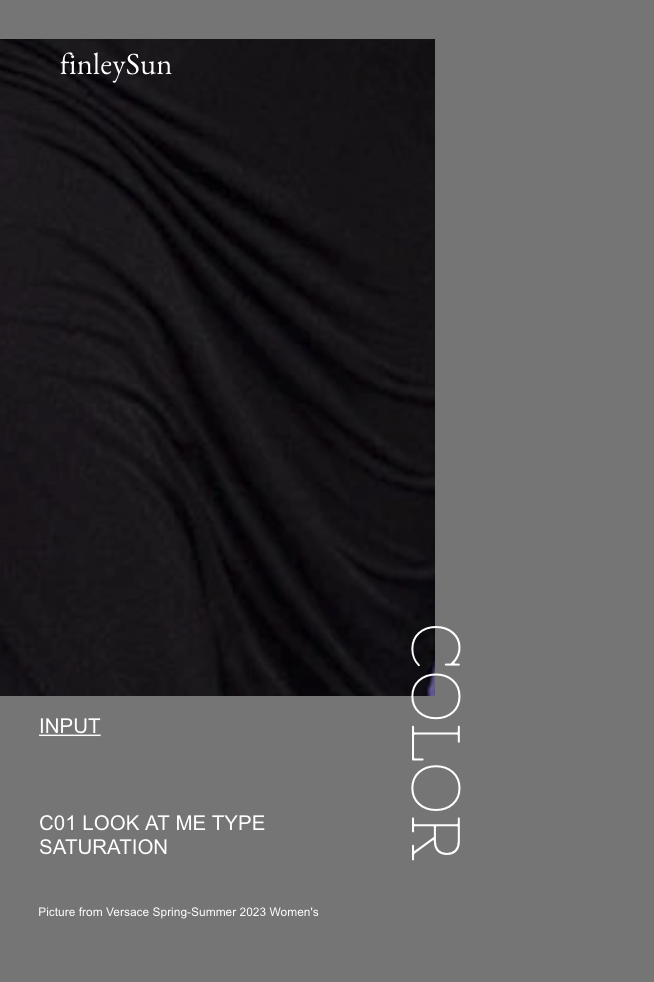

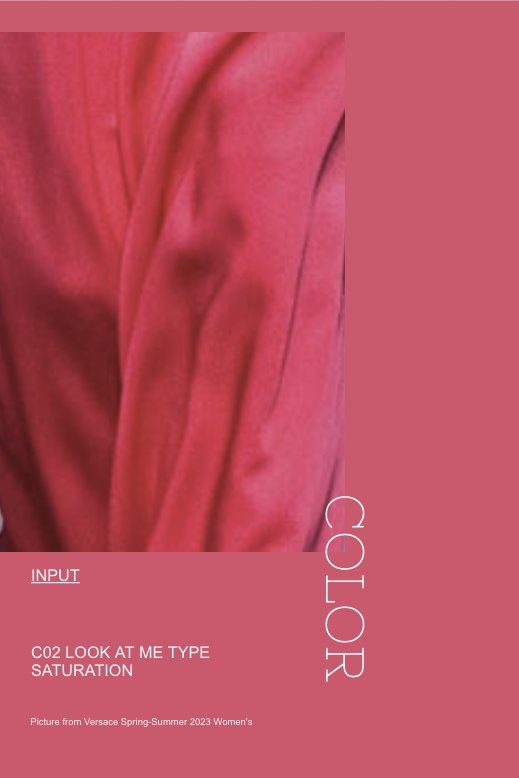

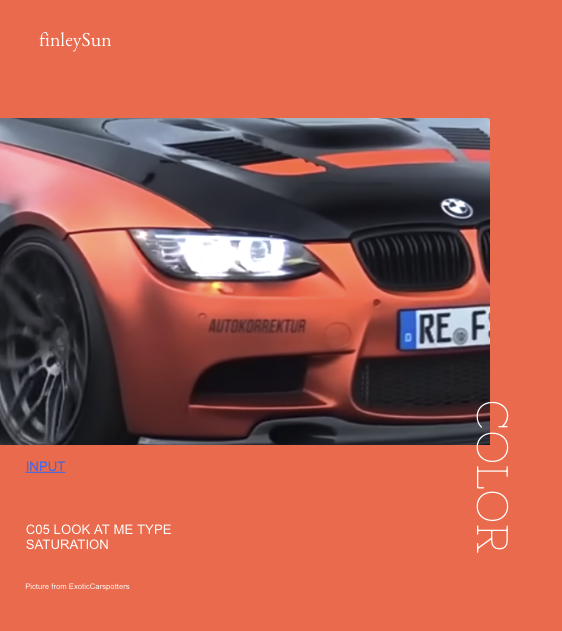

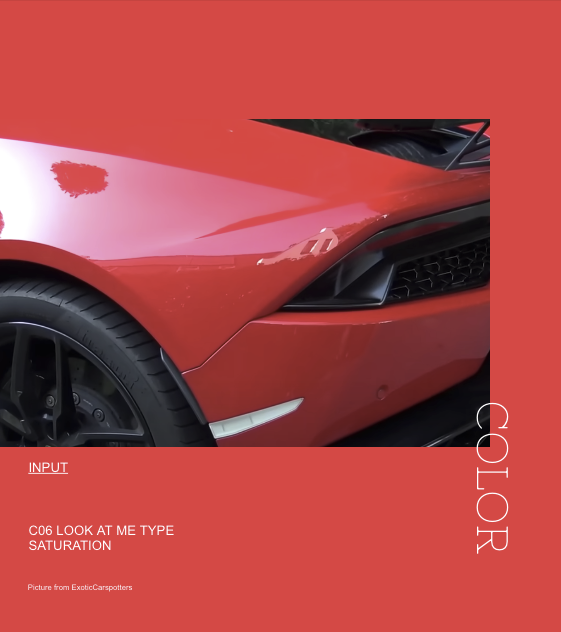

Color

Inspired by the Versace Spring/Summer 2023 women's runway show, I created a collection of vibrant hues intended to capture computational attention, whether in clothing or car design.

Glow

What if the trigger is more trivial, but still interesting and worth archiving?

This dataset on skin is compelling and deserves further development.

Audio

Regarding sound datasets, I am particularly interested in those that capture friction sounds, whether produced by fabric, the human body, or machines.

As shown in the video, sound detection triggers the camera when it hears the sound of denim.
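As a rough illustration of the volume idea, a sound-based trigger could be sketched as a loudness threshold over short audio frames. This is not the Unity prototype itself: the function names, the threshold, and the cooldown value are all hypothetical, and recognizing a specific sound such as denim would need a trained classifier rather than a simple volume check.

```python
import math
from typing import List, Optional, Sequence


def rms(frame: Sequence[float]) -> float:
    """Root-mean-square loudness of one audio frame (samples in -1.0..1.0)."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def shutter_frames(
    frames: Sequence[Sequence[float]],
    threshold: float = 0.3,  # assumed loudness level that counts as a trigger
    cooldown: int = 2,       # assumed minimum number of frames between shots
) -> List[int]:
    """Return the indices of frames at which the shutter would fire."""
    fired: List[int] = []
    last: Optional[int] = None
    for i, frame in enumerate(frames):
        loud_enough = rms(frame) >= threshold
        rested = last is None or i - last >= cooldown
        if loud_enough and rested:
            fired.append(i)
            last = i
    return fired


if __name__ == "__main__":
    quiet = [0.01, -0.01, 0.01, -0.01]
    loud = [0.5, -0.5, 0.5, -0.5]
    print(shutter_frames([quiet, loud, loud, quiet, loud]))
```

The cooldown keeps one sustained sound from firing the shutter on every frame, which matters if each capture is meant to mark a distinct moment.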

Takeaways

- Surveillance vs User Benefits

Currently, users signal consent through their outfits, the cars they drive, the radiance of their skin, and the sounds they make. Without proper safeguards in place, this could be unethical.

- Dataset Wise

The dataset on skin radiance is compelling. My next step is to delve deeper and develop a more tangible trigger.

- Storytelling Wise

In a short film, the protagonist uses an MR camera to gain followers and exposure, capturing beautiful yet fleeting moments. A touching story would help make my practice easier to understand.

December, 2022